What is Data Selection?

In data mining, data selection is the process of identifying the best instruments for data collection along with the most appropriate data type and data source. It may also refer to the process of selecting specific samples, subsets, or data points in a data set for further analysis and processing.

Data selection should not be confused with selective data reporting, which occurs when a researcher excludes from their results data that fails to support a particular hypothesis. It’s also distinct from active and interactive data selection, which involves the use of collected data for monitoring or secondary analysis.

How Does Data Selection Work?

Data selection is a precursor to data collection, serving to guide and refine that process. Successful data selection generally requires that one define:

- The reason for data collection. Are you seeking to answer a research question, gain insights about customer behavior, or train a machine learning model?

- The scope of data that should be collected and whether any data should automatically be excluded from the collection process.

- Who is responsible for making selection decisions? Is it a single individual, a team, or a community? Are there any regulatory or legislative concerns that might impact collection?

- Technical aspects of the data which should be collected, including format and metadata.

- Any time, capital, and resource costs or constraints associated with data collection.

- The type of data that should be collected and the source it should be collected from.

- Data collection tools and methods.

Data Types and Data Sources

Generally speaking, there are two primary types of data:

- Quantitative data is concrete and measurable. It’s typically expressed numerically. Examples include biometric markers, statistics, and measurements.

- Qualitative data is based on observation and interpretation. Examples include video footage, images, and raw text.

Neither type of data is necessarily superior to the other. Some projects may require collecting both qualitative and quantitative data, using the former to contextualize the latter.

Data sources are far broader and may include, but are not limited to:

- Social media.

- Research publications.

- A survey or study.

- Live camera footage.

- Pre-existing data sets.

- Internal reports.

- Polls and/or interviews.

- Government or institutional records.

- Publicly-available information.

- Focus groups.

- Observations.

- Online databases.

When assessing your data sources, it’s important to consider how data will be preserved and stored and how you’ll differentiate between primary and secondary data. The former is raw data collected directly from a data source, while the latter has been processed according to your needs and requirements.

Common Data Selection Methods

Once an organization has broadly defined its target data type and source, the next step is to select the data sets that will be collected.

The methods you use at this stage depend largely on your reasons for collecting the data in the first place. For instance, if your goal is social sentiment analysis, you need only define your collection criteria – specific keywords and interactions that will flag a post as relevant. From there, it’s just a matter of setting up a tool that allows you to monitor and collect that information in real time.
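As a rough illustration of keyword-based collection criteria, the sketch below flags a post as relevant when it mentions at least one term from a keyword set. The keywords, the `is_relevant` helper, and the sample posts are all hypothetical; a real monitoring tool would add its own rules for interactions, language, and deduplication.

```python
import re

# Hypothetical collection criteria: keywords that flag a post as relevant.
KEYWORDS = {"refund", "delivery", "support"}

def is_relevant(post_text, keywords=KEYWORDS):
    """Return True if the post mentions at least one keyword (case-insensitive)."""
    words = set(re.findall(r"[a-z0-9']+", post_text.lower()))
    return not words.isdisjoint(keywords)

# Illustrative posts, not real data.
posts = [
    "Still waiting on my refund after two weeks",
    "Lovely weather today",
    "Customer SUPPORT was really helpful!",
]

# Keep only the posts that match the collection criteria.
selected = [p for p in posts if is_relevant(p)]
```

Matching on whole words rather than substrings avoids flagging unrelated terms that merely contain a keyword.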

If you’re selecting data for a machine learning model, things get somewhat more complicated. Once you’ve selected a data source, you’ll need to ensure that source:

- Does not contain redundant data.

- Is contextually relevant.

- Is unbiased.

- Sufficiently represents all edge cases and corner cases.

- Is varied enough to prevent overfitting.

- Meets any other data requirements your organization may have.

The above is typically determined through a process known as sampling. This involves selecting random entries from a data source in an effort to obtain a representative data set. This can be done in several different ways, including simple random sampling, stratified sampling, systematic sampling, and cluster sampling.

In some cases, it may even be more feasible to pare a larger data set down into a smaller, more representative sample.
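The four sampling methods named above can be sketched with Python's standard library. This is a minimal illustration, not a production sampler: the record layout (`id`, `region`, `site`), the function names, and the fixed seeds are assumptions made for the example.

```python
import random
from collections import defaultdict

def simple_random_sample(records, k, seed=0):
    """Draw k records uniformly at random, without replacement."""
    return random.Random(seed).sample(records, k)

def systematic_sample(records, step, start=0):
    """Take every step-th record, beginning at index start."""
    return records[start::step]

def stratified_sample(records, key, fraction, seed=0):
    """Sample the same fraction from each stratum defined by key(record)."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for r in records:
        strata[key(r)].append(r)
    sample = []
    for group in strata.values():
        k = max(1, round(len(group) * fraction))
        sample.extend(rng.sample(group, k))
    return sample

def cluster_sample(records, key, n_clusters, seed=0):
    """Pick whole clusters at random and keep every record inside them."""
    rng = random.Random(seed)
    clusters = defaultdict(list)
    for r in records:
        clusters[key(r)].append(r)
    chosen = rng.sample(sorted(clusters), n_clusters)
    return [r for c in chosen for r in clusters[c]]

# Hypothetical data source: 100 records, 25 "south" and 75 "north", 10 sites.
records = [
    {"id": i, "region": "south" if i % 4 == 0 else "north", "site": i % 10}
    for i in range(100)
]

srs = simple_random_sample(records, 10)
systematic = systematic_sample(records, step=10)
stratified = stratified_sample(records, key=lambda r: r["region"], fraction=0.2)
clustered = cluster_sample(records, key=lambda r: r["site"], n_clusters=2)
```

Stratified sampling keeps the north/south proportions of the source, which simple random sampling only does on average.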

You might also consider incorporating machine learning into the sampling process. Typically, this will involve some combination of self-supervised learning and active learning with either diversity-based or uncertainty-based sampling. A side benefit of this approach is that it allows you to more readily automate your data pipeline, introducing new samples as needed.
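One common form of uncertainty-based sampling ranks unlabeled items by the entropy of a model's predicted class probabilities and sends the most uncertain ones for labeling. The sketch below assumes the probabilities already exist; the item IDs, the probability values, and the `select_most_uncertain` helper are hypothetical.

```python
import math

def entropy(probs):
    """Shannon entropy of a probability distribution (higher = more uncertain)."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def select_most_uncertain(predictions, budget):
    """Return the IDs of the `budget` items whose predictions have the highest entropy."""
    ranked = sorted(predictions, key=lambda i: entropy(predictions[i]), reverse=True)
    return ranked[:budget]

# Hypothetical model outputs: item ID -> predicted class probabilities.
predictions = {
    "img_001": [0.98, 0.02],
    "img_002": [0.55, 0.45],
    "img_003": [0.70, 0.30],
    "img_004": [0.50, 0.50],
}

# With a labeling budget of two, the two least confident predictions are chosen.
to_label = select_most_uncertain(predictions, budget=2)
```

A diversity-based strategy would instead pick items that are far apart in feature space; the two are often combined.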

Sampling also plays an important role in assessing data collected for research purposes.

Why is Data Selection Important?

Done right, data selection helps to ensure that collected data is:

- Valuable, relevant, and reliable.

- High quality.

- Representative.

Improper data selection, meanwhile, can lead to a multitude of issues, most notably data selection bias. Typically the result of a flawed sampling process, data selection bias produces inaccurate results because non-random data samples are selected for analysis. Other potential problems include data redundancy, unreliable or anomalous results, and the collection of low-quality or inaccurate data.

In short, data selection is an essential part of any data-focused initiative. Without a documented data selection procedure in place, you cannot guarantee the validity and reliability of any findings, even those generated by a machine learning model. Consequently, you also cannot guarantee that, were you to act on that data, you would be making an informed decision that benefits you overall.

In the worst-case scenario, this could result in decisions that are actively harmful to your organization.

Common Data Selection Use Cases

Data selection and data collection are ultimately two sides of the same coin. In light of this, data selection may be applied to a wide range of different use cases. These include, but are not limited to:

- Pre-processing and/or selecting data sets to train machine learning models such as facial recognition, natural language processing, or computer vision tools.

- Feature selection as part of the process of building a predictive model.

- Performing scientific or market research.

- Analyzing customer behavior.

- Collecting data to assist in workflow or process optimization.

- Supporting data exploration as part of a larger data initiative.

- Identifying sensitive data that needs to be removed or redacted to comply with data privacy regulations.

- Performing database queries.

- Identifying and sanitizing redundant data present in databases or file systems.


Field work I: selecting the instrument for data collection *

João Luiz Bastos, Rodrigo Pereira Duquia, David Alejandro González-Chica, Jeovany Martínez Mesa, Renan Rangel Bonamigo.


MAILING ADDRESS: João Luiz Bastos, Universidade Federal de Santa Catarina Trindade, 88040-970 - Florianópolis - SC, Brazil. E-mail: [email protected]

Received 2014 Jul 28; Accepted 2014 Jul 29; Issue date 2014 Nov-Dec.

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

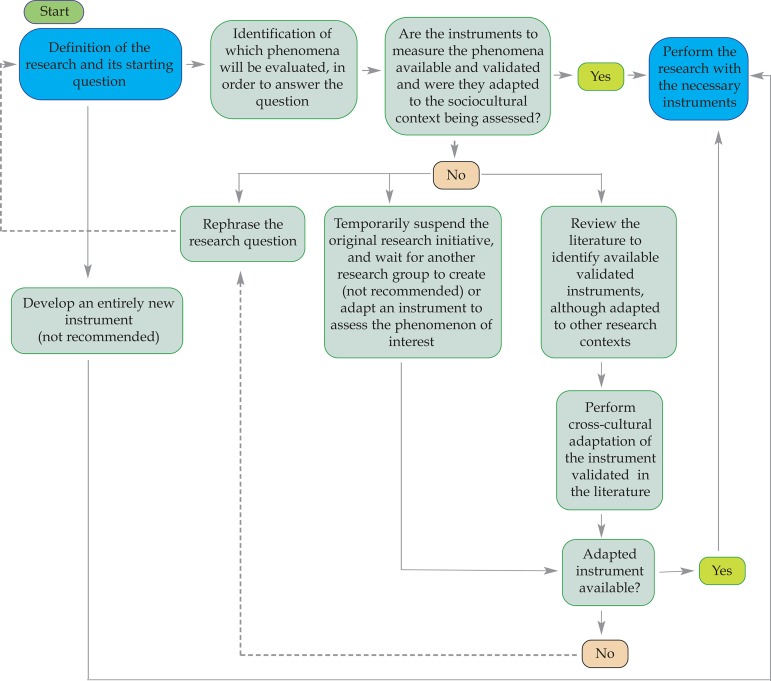

The selection of instruments that will be used to collect data is a crucial step in the research process. Validity and reliability of the collected data and, above all, their potential comparability with data from previous investigations must be prioritized during this phase. We present a decision tree, which is intended to guide the selection of the instruments employed in research projects. Studies conducted along these lines have greater potential to broaden the knowledge on the studied subject and contribute to addressing truly socially relevant needs.

Keywords: Data collection, Health surveys, Methods, Questionnaires, Review

INTRODUCTION

This article discusses one of the most commonplace aspects of a researcher's daily tasks, which is to select among various available options the instruments to perform data collection that meet the intended objectives and, at the same time, respect budgetary and temporal restrictions as well as other equally relevant issues when conducting research. The instrument for data collection is a key element of the traditional questionnaires, which are used to investigate various topics of interest among participants of scientific studies. It is through questionnaires/instruments aimed to assess, for example, sun exposure, family history of skin diseases and mental disorders, that it is possible to measure these phenomena and analyze their associations in health surveys. In this paper, we discuss only questionnaires and their elementary components - the instruments; the reader should refer to specialized literature for knowledge and proper management of other resources available for data collection, including, for example, equipment to measure blood pressure, exams on cutaneous surface lesions and collection of biological material in studies focused on biochemical markers, such as blood parameters etc. Even so, it is argued that the guiding principles presented in this text widely apply, with minor adaptations, to all data collection processes.

As discussed earlier, all scientific investigations, including those in the field of Dermatology, must start with a clear and predefined question. 1 Only after formulating a pertinent research question may the researcher and his/her team plan and implement a series of procedures, which will be able to answer such a question with acceptable levels of validity and reliability. This means that the scientific activity is organized by framing questions and executing a series of procedures to address them, including, for example, the use of questionnaires and their constituent instruments. Such procedures should be recognized as processes that respect ethical research guidelines and whose results are accepted by the scientific community, i.e. they are valid and reliable. However, it should be clarified before moving forward, albeit briefly and partially, what is commonly meant by validity and reliability in science.

In general, validity is considered to be present in an instrument, procedure or research as a whole, when they produce results that reflect what they initially aimed to evaluate or measure. 2 A study can be judged both in terms of internal validity, when its conclusions are correct for that sample of studied individuals, and external validity, when its results can be generalized to other contexts and population domains. 3 For example, in a survey that estimates the frequency of pediatric atopic dermatitis in Southeast Brazil, the closer the results are to the examined subjects' reality, the greater their internal validity. In other words, if the actual frequency of atopic dermatitis were 12.5% for this region and population, a study that achieved a similar result would be considered internally valid. 4 The ability to generalize or extrapolate those results to other regions in the country would be reflected in the study's external validity. Furthermore, to be valid in any dimension this research should have used an established instrument, able to distinguish individuals who actually have this dermatological condition from those who do not have it. So, the validity of the study depends on the validity of the very instruments that are used.
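A small numerical sketch may help show how an instrument's ability to separate affected from unaffected individuals feeds into the study's estimate. Sensitivity and specificity are one standard way to quantify that ability; they are used here as an assumption, since the text does not name a specific metric. All counts are hypothetical, chosen so that the true frequency in 1,000 children is 12.5%.

```python
def sensitivity(true_pos, false_neg):
    """Share of truly affected individuals that the instrument identifies."""
    return true_pos / (true_pos + false_neg)

def specificity(true_neg, false_pos):
    """Share of truly unaffected individuals that the instrument clears."""
    return true_neg / (true_neg + false_pos)

# Hypothetical results for 1,000 children, 125 of whom truly have the condition.
true_pos, false_neg = 110, 15   # affected children: detected vs. missed
false_pos, true_neg = 35, 840   # unaffected children: wrongly flagged vs. cleared

total = true_pos + false_neg + false_pos + true_neg
true_frequency = (true_pos + false_neg) / total       # what the study aims to recover
measured_frequency = (true_pos + false_pos) / total   # what the instrument reports

print(f"sensitivity: {sensitivity(true_pos, false_neg):.2f}")
print(f"specificity: {specificity(true_neg, false_pos):.2f}")
print(f"true frequency: {true_frequency:.3f}, measured: {measured_frequency:.3f}")
```

In this made-up case the instrument overstates the frequency by two percentage points, which is the kind of distortion that erodes internal validity.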

A research instrument is deemed reliable when it is able to consistently generate the same results after being applied repeatedly to the same group of subjects. This concept is often used in multiple stages of the research process including, for example, when a data collection supervisor performs a quality control check, reapplying some questions to the same subjects already interviewed or even during the construction of a new instrument in the test-retest phase in which the reliability and consistency of the given answers are examined. The Acne-Specific Quality of Life Questionnaire was considered reliable after recording consistent data on the same individuals in an interval of seven days between the first and second administrations. 5 Moreover, a study will be more reliable as more precise instruments are used in data collection and as more subjects are recruited - studies with a significant number of participants present results with a smaller margin of error. It is noteworthy that, although the concept of reliability extrapolates the question of temporal consistency (test-retest), we will address this aspect in a more limited fashion in this article. The interested reader should consult specific publications for further discussion of this topic. 6
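Two of the ideas above lend themselves to a short calculation. The sketch below uses the Pearson correlation between two administrations as a simple test-retest indicator, and the usual normal-approximation margin of error for a proportion to show the effect of sample size. Both choices are assumptions for illustration (other statistics, such as intraclass correlation, are also used for test-retest), and the scores are invented.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between paired scores from two administrations."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)

def margin_of_error(p, n, z=1.96):
    """Approximate 95% margin of error for a proportion p estimated from n subjects."""
    return z * math.sqrt(p * (1 - p) / n)

# Hypothetical questionnaire scores for six subjects, seven days apart.
first_administration = [12, 18, 25, 30, 41, 47]
second_administration = [14, 17, 27, 29, 40, 49]
retest_r = pearson_r(first_administration, second_administration)

# Quadrupling the sample size halves the margin of error.
moe_400 = margin_of_error(0.125, 400)
moe_1600 = margin_of_error(0.125, 1600)
```

A correlation close to 1 indicates that subjects keep roughly the same relative position across administrations, which is what test-retest reliability asks for.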

Resuming our original question, it must be noted that the need for careful selection of instruments to be used in scientific investigations must have a solid theoretical basis and should not be considered as a mere fad. Ultimately, the wrong choice of an instrument can compromise the internal validity of the study, producing misleading results, which are therefore unable to answer the research question originally formulated. Besides, the choice of an instrument also has implications in the ability to generalize the research results (external validity), and to compare them with those of other studies conducted nationally or internationally on the same subject - researchers using equivalent instruments can establish an effective dialogue, which enables a more comprehensive analysis of the phenomenon in question, including its antecedents and consequences. 7

In order to justify the need to carefully select the instruments to be used in scientific research and also provide basic guidelines so that these decisions are based on solid grounds, we will divide this article into the following sections: (1) On the comparative nature of scientific research; (2) How to select the most appropriate instrument for my research when there are prototypes available in the scientific literature; and (3) What to do when there are no available instruments to assess the phenomenon of interest to the researcher.

ON THE COMPARATIVE NATURE OF SCIENTIFIC RESEARCH

The inherently comparative nature of scientific research represents an aspect that may sometimes pass unnoticed even to the more experienced researcher. However, the careful examination of a project's theoretical framework, the discussion of a scientific article and also the study results are sufficient to easily demonstrate this comparative nature.

Investigations in the field of Social Anthropology, for example, are based on comparisons of complex cultural systems; the identification of idiosyncrasies in a particular cultural system is only possible after its confrontation with the characteristics of another system. 8 So, the conclusion that a specific South American indigenous population exhibits distinct kinship relations from those observed in the hegemonic Western family composition only occurs when these two forms of cultural systems are compared.

The same occurs in the healthcare field - comparisons are crucial to arrive at conclusions, including the evaluation of consistency of certain scientific findings among a set of previously conducted studies. Likewise, if a researcher is interested in examining the quality of life of patients affected by the pain caused by lower-limb ulcers, he and his team should necessarily make comparisons.

In this case, the comparison is between two distinct groups of subjects with lower-limb ulcers, one of them with pain and the other without it, to ascertain whether the levels of quality of life found in both groups are similar or not. If the researcher observes, by comparison, that the group with pain has a diminished quality of life compared to the group without pain, he may conclude that there is a negative correlation between quality of life and pain related to lower-limb ulcers.

However, the comparative principle goes beyond contrasting internal groups in a study, as illustrated above. Researchers of a particular subject, for example, the development of melanocytic lesions, can only confirm that the use of sunscreen prevents their occurrence, when multiple scientific studies evaluating this question have previously shown it. In other words, by comparing the results generated by several investigations on the same topic, the scientific community can judge the consistency of the findings and thus make a solid conclusion about the subject matter.

Considering that the comparison of results from different studies is a key aspect of the production and consolidation of scientific knowledge, the following question arises: How should one conduct scientific studies so that their results are comparable to each other? Invariably, the answer to this question includes the use of scientific research instruments that are valid, reliable, and equivalent in different studies. So, what are the basic elements of the selection and use of these tools that enable this scientific dialogue? This is exactly what the subsequent section aims to answer.

HOW TO SELECT THE MOST APPROPRIATE INSTRUMENTS FOR MY RESEARCH, WHEN THERE ARE PROTOTYPES AVAILABLE IN THE SCIENTIFIC LITERATURE

We assume that the researcher has already formulated a clear and pertinent research question, which he or she wants to answer by conducting a scientific study. To illustrate the situation, imagine that a researcher is interested in estimating the frequency of depression and anxiety in a population of caregivers of pediatric patients with chronic dermatoses. The research question could be worded specifically in this way: What is the frequency of anxiety and depression in caregivers of children under five years of age, with chronic dermatoses (atopic dermatitis, vitiligo and psoriasis) residing in the city of Porto Alegre in 2014?

Considering that the phenomenon to be evaluated is restricted to anxiety and depression, how should the investigator proceed in this regard? There are at least two possible alternatives: the researcher can develop a set of entirely original items (instrument) to measure both mental disorders cited or select valid and reliable instruments already available in the scientific literature to assess such disorders.

Both alternatives have their own implications. Developing a new instrument means conducting an additional research project that will require considerable effort and time to be carried out. The scientific literature on the development and adaptation of instruments emphatically condemns this decision. 9 Often, researchers who choose to develop new instruments overestimate the deficiencies of the existing ones and disregard the time and effort needed to construct a new and appropriate prototype. In most cases, the optimistic and to some extent naive expectations of these researchers are frustrated by the development of a new instrument whose flaws are potentially similar to or even greater than the ones found in existing instruments, but with an additional aggravating factor: the possibility of comparing the results of a study performed with the newly developed instrument to those of previous studies employing other measuring tools is, at least initially, nonexistent. In general, we recommend developing new instruments only when there are no other options for measuring the phenomenon in question or when the existing ones have huge and confirmed limitations.

If the researcher has taken the (right) decision to use an existing instrument to assess anxiety and depression, we suggest that he or she should cover the following steps: 9

1. Conduct a very broad and thorough literature search to retrieve the instruments that assess the phenomenon in question. The bibliographic search can start in the traditional bibliographical resources in healthcare, such as PubMed, but it must also take into consideration those available in other scientific fields, such as psychology and education, whenever necessary;

2. Identify all the available instruments to measure the phenomenon of interest. Some may not have been published in books, book chapters or as scientific articles. In these cases, it is essential to make contact with the researchers working in the area to ask them about the existence of unpublished measuring instruments (gray literature);

3. Based on the elements presented in Table 1, reassess the course of development of each identified instrument, seeking to distinguish those with established results, good indicators of validity and reliability and, in particular, those extensively used by the scientific community. 10 Ideally, the instruments of choice are those that were also evaluated by independent research groups, i.e., groups which were not involved with their initial design; and

4. Select an instrument that meets the goals of your study, considering ethical, budgetary and time constraints, among others. Whenever the chosen instrument has been created in a research context significantly distinct from that of your investigation, search the literature for studies of cross-cultural adaptation that aimed to produce an equivalent version of the instrument for the language and cultural specificities of your research context. 11 Thus, as argued by Reichenheim & Moraes, "the process of cross-cultural adaptation should be a combination between a component of literal translation of words and phrases from one language to another and a meticulous tuning process, that addresses the cultural context and lifestyle of the target-population to which the version will be applied." 10

Table 1. Aspects regarding validity and reliability (quality) of measurement instruments *

This table was designed based on data published by Reichenheim & Moraes 10 and Streiner & Norman 9, which should be consulted if the reader wishes to advance further in these topics.

Proceeding as described above, the privileged scenario will be the one in which studies addressing the same phenomena shall be conducted with equivalent instruments to assess them and therefore their results will be readily comparable. This would be the same as having a study conducted in different countries on the topics of depression and anxiety in caregivers of pediatric patients with chronic skin diseases and each one would use a version of the instrument adapted for the respective research contexts. So, while in Brazil the Hospital Anxiety and Depression Scale would be used in a version adapted to Brazilian Portuguese, the equivalent version of this same instrument in Japanese would be used in Japan. Therefore, the rates of these common mental disorders, estimated by both studies would be directly comparable at the end of each survey.

WHAT TO DO WHEN THERE ARE NO AVAILABLE INSTRUMENTS FOR MEASURING THE PHENOMENON OF INTEREST TO THE RESEARCHER

Whenever the researcher is confronted with the lack of instruments for measuring the phenomenon of interest, it is possible to follow at least one of these leads:

Ultimately, review the research question and replace it with one that does not involve the assessment of the phenomenon for which there are no measurement tools available;

Develop an ancillary research program, whose main objective is to perform a cross-cultural adaptation of a measurement instrument to the context in which the investigation will be conducted. In this case, one must consider the need to postpone the original study until the adapted version of the instrument is available - something that takes, in the most optimistic prediction, two to three years; or

Temporarily suspend the research initiative, waiting until other researchers have provided an adapted version of the selected instrument, making it possible to execute the study in a similar sociocultural context.

The synthesis of the entire process suggested in this article is illustrated in the decision tree, depicted in Figure 1. We believe that the conduct of studies along these lines has an even greater potential to increase the knowledge on the particularities of any topic of interest and, ultimately, contribute to approaching socially relevant demands.

Figure 1. Decision tree to guide the process of choosing an instrument to collect scientific research data
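The branching logic described in the text can be approximated in a few lines of code. The sketch below is one possible reading of the prose, not a transcription of the figure: the function name, the three yes/no inputs, and the wording of the returned options are assumptions made for illustration.

```python
def instrument_options(found_in_literature, validated, adapted_to_context):
    """Return the courses of action the text suggests for a given situation.

    All three arguments are booleans describing the best candidate instrument:
    whether one was found, whether it has good validity and reliability
    indicators, and whether a version adapted to the research context exists.
    """
    if found_in_literature and validated and adapted_to_context:
        return ["use the existing instrument"]
    if found_in_literature and validated:
        # A sound instrument exists, but not for this language or culture.
        return [
            "run an ancillary cross-cultural adaptation study",
            "suspend the study until an adapted version is available",
            "revise the research question",
        ]
    # Nothing suitable exists; a new instrument is the last resort.
    return [
        "revise the research question",
        "develop a new instrument (only when no other option exists)",
    ]

# Example: a validated scale exists, but only in another language.
options = instrument_options(
    found_in_literature=True, validated=True, adapted_to_context=False
)
```

Returning a list rather than a single answer reflects that the text offers alternatives at this point and leaves the choice to the researcher.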

Conflict of Interest: None

Financial Support: None

How to cite this article: Bastos JL, Duquia RP, González-Chica DA, Mesa JM, Bonamigo RR. Field work I: selecting the instrument for data collection. An Bras Dermatol. 2014;89(6):918-23.

Work performed at the Universidade Federal de Santa Catarina, Porto Alegre Health Sciences Federal University and Latin American Cooperative Oncology Group (LACOG) - Porto Alegre (RS), Brazil.

REFERENCES

1. Martínez-Mesa J, González-Chica DA, Bastos JL, Bonamigo RR, Duquia RP. Sample size: how many participants do I need in my research? An Bras Dermatol. 2014;89:609–615. doi: 10.1590/abd1806-4841.20143705.

2. Gil AC. Métodos e técnicas de pesquisa social. São Paulo: Atlas; 1999.

3. Pereira MG. Epidemiologia: teoria e prática. Rio de Janeiro: Guanabara Koogan; 2002.

4. Solé D, Camelo-Nunes IC, Wandalsen GF, Mallozi MC, Naspitz CK, Brazilian ISAAC Group. Prevalence of atopic eczema and related symptoms in Brazilian schoolchildren: results from the International Study of Asthma and Allergies in Childhood (ISAAC) phase 3. J Investig Allergol Clin Immunol. 2006;16:367–376.

5. Kamamoto Cde S, Hassun KM, Bagatin E, Tomimori J. Acne-specific quality of life questionnaire (Acne-QoL): translation, cultural adaptation and validation into Brazilian-Portuguese language. An Bras Dermatol. 2014;89:83–90. doi: 10.1590/abd1806-4841.20142172.

6. Carmines EG, Zeller RA. Reliability and validity assessment. In: Lewis-Beck MS, editor. Reliability and validity assessment. Thousand Oaks: SAGE; 1979.

7. Berry JW, Poortinga YH, Segall MH, Dasen PR. Cross-cultural psychology: research and applications. Cambridge: Cambridge University Press; 2007.

8. DaMatta R. Relativizando: uma introdução à antropologia social. Rio de Janeiro: Rocco; 1987.

9. Streiner DL, Norman GR. Health measurement scales: a practical guide to their development and use. 2nd ed. Oxford: Oxford University Press; 1998.

10. Reichenheim ME, Moraes CL. Qualidade dos instrumentos epidemiológicos. In: Almeida-Filho N, Barreto ML, editors. Epidemiologia & saúde: fundamentos, métodos e aplicações. Rio de Janeiro: Guanabara Koogan; 2012. pp. 150–164.

11. Reichenheim ME, Moraes CL. Operationalizing the cross-cultural adaptation of epidemiological measurement instruments. Rev Saúde Pública. 2007;41:665–673. doi: 10.1590/s0034-89102006005000035.


NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

Velentgas P, Dreyer NA, Nourjah P, et al., editors. Developing a Protocol for Observational Comparative Effectiveness Research: A User's Guide. Rockville (MD): Agency for Healthcare Research and Quality (US); 2013 Jan.

Developing a Protocol for Observational Comparative Effectiveness Research: A User's Guide.


Chapter 8 Selection of Data Sources

Cynthia Kornegay, PhD and Jodi B Segal, MD, MPH.

The research question dictates the type of data required, and the researcher must best match the data to the question or decide whether primary data collection is warranted. This chapter discusses considerations for data source selection for comparative effectiveness research (CER). Important considerations for choosing data include whether or not the key variables are available to appropriately define an analytic cohort and identify exposures, outcomes, covariates, and confounders. Data should be sufficiently granular, contain historical information to determine baseline covariates, and represent an adequate duration of followup. The widespread availability of existing data from electronic health records, personal health records, and drug surveillance programs provides an opportunity for answering CER questions without the high expense often associated with primary data collection. If key data elements are unobtainable in an otherwise ideal dataset, methods such as predicting absent variables with available data or interpolating for missing time points may be used. Alternatively, the researcher may link datasets. The process of data linking, which combines information about one individual from multiple sources, increases the richness of information available in a study. This is in contrast to data pooling and networking, which are normally used to increase the size of an observational study. Each data source has advantages and disadvantages, which should be considered thoroughly in light of the research question of interest, as the validity of the study will be dictated by the quality of the data. This chapter concludes with a checklist of key considerations for selecting a data source for a CER protocol.
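The difference between linking and pooling can be made concrete with a toy example. In the sketch below, linking merges fields about the same individual from several sources (more information per person), while pooling stacks records from several datasets (more people). The patient identifiers, field names, and the `link` and `pool` helpers are hypothetical, and real linkage would also need to handle mismatched or missing identifiers.

```python
def link(*sources):
    """Combine fields about the same individual from multiple sources."""
    linked = {}
    for source in sources:
        for patient_id, fields in source.items():
            linked.setdefault(patient_id, {}).update(fields)
    return linked

def pool(*datasets):
    """Stack records from multiple datasets into one larger dataset."""
    return [row for dataset in datasets for row in dataset]

# Hypothetical sources keyed by a shared patient identifier.
health_records = {"p1": {"age": 54}, "p2": {"age": 61}}
pharmacy_claims = {"p1": {"drug": "A"}, "p2": {"drug": "B"}}
linked = link(health_records, pharmacy_claims)   # same 2 patients, richer records

# Hypothetical datasets from two sites with the same fields.
site_a = [{"id": "a1", "age": 54}, {"id": "a2", "age": 61}]
site_b = [{"id": "b1", "age": 47}]
pooled = pool(site_a, site_b)                    # 3 patients, same fields
```

Linking widens each record and pooling lengthens the dataset, which mirrors the distinction drawn above between richness of information and study size.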

Introduction

Identifying appropriate data sources to answer comparative effectiveness research (CER) questions is challenging. While the widespread availability of existing data provides an opportunity for answering CER questions without the high expense associated with primary data collection, the data source must be chosen carefully to ensure that it can address the study question, that it has a sufficient number of observations, that key variables are available, that there is adequate confounder control, and that there is a sufficient length of followup.

This chapter describes data that may be useful for observational CER studies and the sources of these data, including data collected for both research and nonresearch purposes. The chapter also explains how the research question should dictate the type of data required and how to best match data to the issue at hand. Considerations for evaluating data quality (e.g., demonstrating data integrity) and privacy protection provisions are discussed. The chapter concludes by describing new sources of data that may expand the options available to CER researchers to address questions. Recommendations for “best practices” regarding data selection are included, along with a checklist that researchers may use when developing and writing a CER protocol. To start, however, it is important to consider primary data collection for observational research, since the use of secondary data may be impossible or unwise in some situations.

- Data Options

Primary data are data collected expressly for research. Observational studies, meaning studies with no dictated intervention, require the collection of new data if there are no adequate existing data for testing hypotheses. In contrast, secondary data refer to data that were collected for other purposes and are being used secondarily to answer a research question. There are other ways to categorize data, but this classification is useful because the types of information collected for research differ markedly from the types of information collected for nonresearch purposes.

Primary Data

Primary data are collected by the investigator directly from study participants to address a specific question or hypothesis. Data can be collected by in-person or telephone interviews, mail surveys, or computerized questionnaires. While primary data collection has the advantage of being able to address a specific study question, it is often time consuming and expensive. The observational research designs that often require primary data collection are described here. While these designs may also incorporate existing data, we describe them here in the context of primary data collection. The need to use these designs is determined by the research question; if the research question clearly must be answered with one of the designs below, primary data collection may be required. Additional detail about the selection of a suitable study design for observational CER is presented in chapter 2.

Prospective Observational Studies

Observational studies are those in which individuals are selected on the basis of specific characteristics and their progress is monitored. A key concept is that the investigator does not assign the exposure(s) of interest. There are two basic observational designs: (1) cohort studies, in which selection is based on exposure and participants are followed for the occurrence of a particular outcome, and (2) case-control studies, where selection is based on a disease or condition and participants are contacted to determine a particular exposure.

Within this framework, there is a wide variety of possible designs. Participants can be individuals or groups (e.g., schools or hospitals); they can be followed into the future (prospective data collection) or asked to recall past events (retrospective data collection); and, depending on the specific study questions, elements of the two basic designs can be combined into a single study (e.g., case-cohort or nested case-control studies). If information is also collected on those who are either not exposed or do not have the outcome of interest, observational studies can be used for hypothesis testing.

An example of a prospective observational study is a recent investigation comparing medication adherence and viral suppression between once-daily and more-than-once daily pill regimens in a homeless and near-homeless HIV-positive population. 1 Adherence was measured using unscheduled pill-count visits over the six-month study period while viral suppression was determined at the end of the study. The investigators found that both adherence and viral suppression levels were higher in the once-daily groups compared to the more-than-once-daily groups. The results of this study are notable as they indicate an effective method to treat HIV in a particularly hard-to-reach population.

In the most general sense, a registry is a systematic collection of data. Registries that are used for research have clearly stated purposes and targeted data collection.

Registries use an observational study design that does not specify treatments or require therapies intended to change patient outcomes. There are generally few inclusion and exclusion criteria to make the results broadly generalizable. Patients are typically identified when they present for care, and the data collected generally include clinical and laboratory tests and measurements. Registries can be defined by specific diseases or conditions (e.g., cancer, birth defects, or rheumatoid arthritis), exposures (e.g., to drug products, medical devices, environmental conditions, or radiation), time periods, or populations. Depending on their purpose and the information collected, registry data can potentially be used for public health surveillance, to determine incidence rates, to perform risk assessment, to monitor progress, and to improve clinical practice. Registries can also provide a unique perspective into specialized subpopulations. However, like any long-term study, they can be very expensive to maintain due to the effort required to remain in contact with the participants over extended periods of time.

Registries have been used extensively for CER. As an example, the United States Renal Data System (USRDS) is a registry of individuals receiving dialysis that includes clinical data as well as medical claims. This registry has been used to answer questions about the comparative effectiveness and safety of erythropoiesis-stimulating agents and iron in this patient population, 2 the comparative effectiveness of dialysis chain facilities, 3 and the effectiveness of nocturnal versus daytime dialysis. 4 Another registry is the Surveillance, Epidemiology, and End Results (SEER) registry, which gathers data on Americans with cancer. Much of the SEER registry's value for CER comes from its linkage to Medicare data. Examples of CER studies that make use of this linked data include an evaluation of the effectiveness of radiofrequency ablation for hepatocellular carcinoma compared to resection or no treatment 5 and a comparison of the safety of open versus radical nephrectomy in individuals with kidney cancer. 6 A third example is a study that used SEER data to evaluate survival among individuals with bladder cancer who underwent early radical cystectomy compared to those patients who did not. 7

Secondary Data

Much secondary data that are used for CER can be considered byproducts of clinical care. The framework developed by Schneeweiss and Avorn is a useful structure with which to consider the secondary sources of data generated within this context. 8 They described the “record generation process,” which is the information generated during patient care. Within this framework, data are generated in the creation of the paper-based or electronic medical (health) record, claims are generated so that providers are paid for their services, and claims and dispensing records are generated at the pharmacy at the time of payment. As data are not collected specifically for the research question of interest, particular attention must be paid to ensure that data quality is sufficient for the study purpose.

A thorough understanding of the health system in which patients receive care and the insurance products they use is needed for a clear understanding of whether the data are likely to be complete or unavailable for the population of interest. Integrated health delivery systems such as Kaiser Permanente, in which patients receive the majority of their care from providers and facilities within the system, provide the most complete picture of patient medical care.

Electronic Health Record (EHR) Data

Electronic health records (EHRs) are used by health care providers to capture the details of the clinical encounter. They are chiefly clinical documentation systems. They are populated with some combination of free text describing findings from the history and the physical examination; results inputted with check-boxes to indicate positive responses; patient-reported responses to questions for recording symptoms or for screening; prefilled templates that describe normal and abnormal findings; imported text from earlier notes on the patient; and linkages to laboratory results, radiology reports and images, and specialized testing results (such as electrocardiograms, echocardiograms, or pulmonary function test results). Some EHRs include other features, such as flow sheets of clinical results, particularly those results used in inpatient settings (e.g., blood pressure measurements); problem and habits lists; electronic medication administration records; medication reconciliation features; decision support systems and/or clinical pathways and protocols; and specialty features for the documentation needs of specialty practices. The variables that might be accessible from EHR data are shown in Table 8.1.

Table 8.1. Data elements available in electronic health records and/or in administrative claims data.

As can be seen from the variable list, the details about an individual patient may be extensive. The method of data collection is not standardized and the intervals between visits vary for every patient in accordance with usual medical practice. Of note, medication information captured in EHRs differs from data captured by pharmacy claims. While pharmacy claims contain information on medications dispensed (including the national drug code [NDC] to identify the medication, dispensing date, days' supply, and amount dispensed), EHRs more typically contain information on medications prescribed by a clinician. Medication data from EHRs are often captured as part of the patient's medication list, which may include the medication name, order date, strength, units, quantity, and frequency. Depending on the specific EHR system, inpatient medication orders may or may not be available, but typically they are not. As EHRs differ substantially, it is important to understand what fields are captured in the EHR under consideration, and to realize that completeness of specific fields may vary depending on how individual health care providers use the EHR.

An additional challenge with EHR data is that patients may receive care at different facilities, and information regarding their health may be entered separately into multiple systems that are not integrated and are inconsistent across practices. If a patient has an emergency room visit at a hospital that is not his usual site of care, it is unlikely to be recorded in the electronic medical record that houses the majority of his clinical information. Additionally, for a patient who resides in two or more cities during the year, the electronic medical record at each institution may be incomplete if the institutions do not share a common data system.

Paper-Based Records

Although time-intensive to access, the use of paper-based records is sometimes required. Many practices still do not have EHRs; in 2009, it was estimated that only half of outpatient practices in the U.S. were using EHRs. 10 Exclusion of sites without electronic records may bias study results because these sites may have different patient populations or because there may be regional differences in practice. Paper-based records may be particularly valuable if patient-reported information is needed (such as severity of pain, quality of symptoms, mental health concerns, and habits). The richness of information in paper-based records may exceed that in EHR data, particularly if the electronic data are template driven. Additionally, paper-based records are valuable as a source of primary data for validating data that are available elsewhere, such as in administrative claims. With a paper medical record, the researcher can test the sensitivity and specificity of the information contained in claims data by reviewing the paper record to see if the diagnosis or procedure was described. In that situation, the paper-based record would be considered the reference standard for diagnoses and procedures.
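The validation described above can be sketched in a few lines. This is a minimal illustration, assuming each patient has been reduced to a pair of yes/no flags (whether the diagnosis appears in claims, and whether chart review of the paper record confirms it); the function name and the counts are hypothetical, not taken from any study cited in this chapter.

```python
def sensitivity_specificity(pairs):
    """Compare claims against the paper record (treated as the reference standard).

    pairs: iterable of (claims_positive, chart_positive) booleans, one per patient.
    Returns (sensitivity, specificity); either is None if its denominator is zero.
    """
    tp = fp = fn = tn = 0
    for claims_pos, chart_pos in pairs:
        if chart_pos and claims_pos:
            tp += 1          # diagnosis in chart and in claims
        elif chart_pos:
            fn += 1          # diagnosis in chart, missed by claims
        elif claims_pos:
            fp += 1          # claims code not supported by chart
        else:
            tn += 1          # absent in both
    sensitivity = tp / (tp + fn) if (tp + fn) else None
    specificity = tn / (tn + fp) if (tn + fp) else None
    return sensitivity, specificity


# Hypothetical chart-review sample of 20 patients.
sample = ([(True, True)] * 8 + [(False, True)] * 2
          + [(True, False)] * 1 + [(False, False)] * 9)
sens, spec = sensitivity_specificity(sample)
print(sens, spec)
```

In this invented sample, claims capture 8 of the 10 chart-confirmed diagnoses and correctly show no code for 9 of the 10 patients without the diagnosis.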

Administrative Data

Administrative health insurance data are typically generated as part of the process of obtaining insurance reimbursement. Presently, medical claims are most often coded using the International Classification of Diseases (ICD) and the Current Procedural Terminology (CPT) systems. The ICD, Ninth Revision, Clinical Modification (ICD-9-CM) is the official system of assigning codes to diagnoses and procedures associated with hospital utilization in the United States. Much of Europe is using ICD-10 already, while the United States currently uses ICD-9 for everything except mortality data; the United States will start using ICD-10 in October 2013. 11 The ICD coding terminology includes a numerical list of codes identifying diseases, as well as a classification system for surgical, diagnostic, and therapeutic procedures. The National Center for Health Statistics and the Centers for Medicare and Medicaid Services (CMS) are responsible for overseeing modifications to the ICD. For outpatient encounters, the CPT is used for submitting claims for services. This terminology was initially developed by the American Medical Association in 1966 to encourage the use of standard terms and descriptors to document procedures in the medical record, to communicate accurate information on procedures and services to agencies concerned with insurance claims, to provide the basis for a computer-oriented system to evaluate operative procedures, and for actuarial and statistical purposes. Presently, this system of terminology is the required nomenclature to report outpatient medical procedures and services to U.S. public and private health insurance programs, as the ICD is the required system for diagnosis codes and inpatient hospital services. 12 The diagnosis-related group (DRG) classification is a system to classify hospital cases by their ICD codes into one of approximately 500 groups expected to have similar hospital resource use; it was developed for Medicare as part of the prospective payment system. The DRG system can be used for research as well, but with the recognition that there may be clinical heterogeneity within a DRG. There is no correlate of the DRG for outpatient care.

When using these claims for research purposes, the validity of the coding is of the highest importance. This is described in more detail below. The validity of codes for procedures exceeds the validity of diagnostic codes, as procedural billing is more closely tied to reimbursement. Understandably, the motivation for coding procedures correctly is high. For diagnosis codes, however, a diagnosis that is under evaluation (e.g., a medical visit or a test to “rule out” a particular condition) is indistinguishable from a diagnosis that has been confirmed. Consequently, researchers tend to look for sequences of diagnoses, or diagnoses followed by treatments appropriate for those diagnoses, in order to identify conditions of interest. Although Medicare requires an appropriate diagnosis code to accompany the procedure code to authorize payment, other insurers have looser requirements. There are few external motivators to code diagnoses with high precision, so the validity of these codes requires an understanding of the health insurance system's approach to documentation. 13 - 20 Investigators using claims data for CER should validate the key diagnostic and procedure codes in the study. There are many examples of validation studies in the literature upon which to pattern such a study. 18 , 21 - 22 Additional codes are available in some datasets—for example, the “present on admission” code that has been required for Medicare and Medicaid billing since October 2007—which may help in further refinement of algorithms for identifying key exposures and outcomes.
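The approach of looking for sequences of diagnoses, or a diagnosis followed by an appropriate treatment, can be expressed as a simple case-finding rule. The sketch below is illustrative only: the record layout, the placeholder codes ("DX_A", "RX_1"), and the threshold of two diagnosis dates are assumptions, and any real algorithm would need to be validated as described above.

```python
from datetime import date


def meets_case_definition(claims, dx_code, rx_codes, min_dx_dates=2):
    """Hypothetical claims-based case definition.

    A patient qualifies if the diagnosis code appears on at least `min_dx_dates`
    distinct dates, or appears once and is followed (same day or later) by a
    treatment in `rx_codes`.
    claims: list of dicts with keys "type" ("dx" or "rx"), "code", "date".
    """
    dx_dates = sorted({c["date"] for c in claims
                       if c["type"] == "dx" and c["code"] == dx_code})
    if len(dx_dates) >= min_dx_dates:
        return True
    if dx_dates:
        first_dx = dx_dates[0]
        return any(c["type"] == "rx" and c["code"] in rx_codes
                   and c["date"] >= first_dx for c in claims)
    return False


# A single "rule out" visit does not qualify on its own.
rule_out = [{"type": "dx", "code": "DX_A", "date": date(2010, 3, 1)}]
# A diagnosis followed by an appropriate treatment does.
treated = rule_out + [{"type": "rx", "code": "RX_1", "date": date(2010, 3, 15)}]
# So do repeated diagnoses on separate dates.
repeated = rule_out + [{"type": "dx", "code": "DX_A", "date": date(2010, 6, 1)}]

print(meets_case_definition(rule_out, "DX_A", {"RX_1"}))
print(meets_case_definition(treated, "DX_A", {"RX_1"}))
print(meets_case_definition(repeated, "DX_A", {"RX_1"}))
```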

Pharmacy Data

Outpatient pharmacy data include claims submitted to insurance companies for payment as well as the records on drug dispensing kept by the pharmacy or by the pharmacy benefits manager (PBM). Claims submitted to the insurance company use the NDC as the identifier of the product. The NDC is a unique, 10-digit, 3-segment number that is a standard product identifier for human drugs in the United States. Included in this number are the active ingredient, the dosage form and route of administration, the strength of the product, and the package size and type. The U.S. Food and Drug Administration (FDA) has authority over the NDC codes. Claims submitted to insurance companies for payment for drugs are submitted with the NDC code as well as information about the supply dispensed (e.g., how many days the prescription is expected to cover), and the amount of medication dispensed. This information can be used to provide a detailed picture of the medications dispensed to the patient. Medications for which a claim is not submitted, or that are not covered by the insurance plan (e.g., over-the-counter medications), are not captured in these data. It should be noted that claims data are generally weak for medical devices, due to a lack of uniform coding, and claims often do not include drugs that are not dispensed through the pharmacy (e.g., injections administered in a clinic).

Large national PBMs, such as Medco Health Solutions or Caremark, administer prescription drug programs and are responsible for processing and paying prescription drug claims. They are the interface between the pharmacies and the payers, though some larger health insurers manage their own pharmacy data. PBM models differ substantially, but most maintain formularies, contract with pharmacies, and negotiate prices with drug manufacturers. The differences in formularies across PBMs may offer researchers the advantage of natural experiments, as some patients will not be dispensed a particular medication even when indicated, while other patients will be dispensed the medication, solely due to the formulary differences of their PBMs. Some PBMs own their own mail-order pharmacies, eliminating the local pharmacies' role in distributing medications. PBMs more recently have taken on roles of disease management and outcomes reporting, which generates additional data that may be accessible for research purposes. Figure 8.1 illustrates the flow of information into PBMs from health plans, pharmaceutical manufacturers, and pharmacies. PBMs contain a potentially rich source of data for CER, provided that these data can be linked with outcomes. Examples of CERs that have been done using PBM data include two studies that evaluate patient adherence to medications as their outcome. One compared adherence to different antihypertensive medications using data from Medco Health Solutions. The researchers identified differential adherence to antihypertensive drugs, which has implications for their effectiveness in practice. 23 Another study compared costs associated with a step-therapy intervention that controlled access to angiotensin-receptor blockers with the costs associated with open access to these drugs. 24 Data came from three health plans that contracted with one PBM and one health plan that contracted with a different PBM.

Figure 8.1. How pharmacy benefits managers fit within the payment system for prescription drugs. From the Congressional Budget Office, based in part on General Accounting Office, Pharmacy Benefit Managers: Early Results on Ventures with Drug Manufacturers. GAO/HEHS-96-45.

Frequently, PBM data are accessible through health insurers along with related medical claims, thus enabling single-source access to data on both treatment and outcomes. Data from the U.S. Department of Veterans Affairs (VA) Pharmacy Benefits Manager, combined with other VA data or linked to Medicare claims, are a valuable resource that has generated comparative effectiveness and safety information. 25 - 26

Regulatory Data

FDA has a vast store of data from submissions for regulatory approval from manufacturers. While the majority of the submissions are not in a format that is usable for research (e.g., paper-based submissions or PDFs), increasingly the submissions are in formats where the data may be used for purposes beyond that for which they were collected, including CER. Additionally, FDA is committed to converting many of its older datasets into research-appropriate data. FDA presently has a contractor working on conversion of 101 trials into useable data that will be stored in their clinical trial repository. 27 It also has pilot projects underway that are exploring the benefits and risks of providing external researchers access to their data for CER. It is recognized that issues of using proprietary data or trade-secret data will arise, and that there may be regulatory and data-security challenges to address. A limitation of using these trials for CER is that they are typically efficacy trials rather than effectiveness trials. However, when combined using techniques of meta-analysis, they may provide a comprehensive picture of a drug's efficacy and short-term safety.

Repurposed Trial Data or Data From Completed Observational Studies

A vast amount of data is collected for clinical research in studies funded by the Federal government. By law, these data must be made available upon request to other researchers, as this was information collected with taxpayer dollars. This is an exceptional source of existing data. To illustrate, the Cardiovascular Health Study is a large cohort study that was designed to identify risk factors for coronary heart disease and stroke by means of a population-based longitudinal cohort study. 28 The study investigators collected diverse outcomes including information on hospitalization, specifically heart failure associated hospitalizations. Thus, the data from this study can be used to answer comparative effectiveness questions about interventions and their effectiveness on preventing heart failure complications, even though this was not a primary aim of the original cohort study. A limitation is that the researcher is limited to only the data that were collected—an important consideration when selecting a dataset. Some of the datasets have associated biospecimen repositories from which specimens can be requested for additional testing.

Completed studies with publicly available datasets often can be identified through the National Institutes of Health institute that funded the study. For example, the National Heart Lung and Blood Institute has a searchable site (at https://biolincc.nhlbi.nih.gov/home/) where datasets can be identified and requested. Similarly, the National Institute of Diabetes and Digestive and Kidney Diseases has a repository of datasets as well as instructions for requesting data (at https://www.niddkrepository.org/niddk/jsp/public/resource.jsp).

- Considerations for Selecting Data

Required Data Elements

The research question must drive the choice of data. Frequently, however, as the question is developed, it becomes clear that a particular piece of information is critical to answering the question. For example, a question about interventions that reduce the amount of albuminuria will almost certainly require access to laboratory data that include measurement of this outcome. Reliance on ICD-9 codes or use of a statement in the medical record that “albuminuria decreased” will be insufficiently specific for research purposes. Similarly, a study question about racial differences in outcomes from coronary interventions requires data that include documentation of race; this requirement precludes use of most administrative data from private insurers that do not collect this information. If the relevant data are not available in an existing data source, this may be an indication that primary data collection or linking of datasets is in order. It is recommended that the investigator specify a priori what the minimum requirements of the data are before the data are identified, as this will help avoid the effort of making suboptimal data work for a given study question.

If some key data elements seem to be unobtainable in an otherwise suitable dataset, one might consider ways to supplement the available data. These strategies may be methodological, such as predicting absent data variables with data that are available, or interpolating for missing time points. The authors recently completed a study in which the presence of obesity was predicted for individuals in the dataset based on ICD-9 codes. 29 In such instances, it is desirable to provide a reference to support the quality of data obtained by such an approach.
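As one concrete instance of interpolating for missing time points, the sketch below estimates a laboratory value on a day between two observed measurements by linear interpolation. It assumes the value changes roughly linearly between visits, which may not hold clinically; the function name and the numbers are hypothetical.

```python
def interpolate_linear(observations, target_day):
    """Estimate a value at target_day from the two surrounding observations.

    observations: list of (day, value) tuples sorted by day.
    Returns None when target_day lies outside the observed range, since
    extrapolation would be a stronger assumption than interpolation.
    """
    for (d0, v0), (d1, v1) in zip(observations, observations[1:]):
        if d0 <= target_day <= d1:
            if d1 == d0:
                return v0
            fraction = (target_day - d0) / (d1 - d0)
            return v0 + fraction * (v1 - v0)
    return None


# Hypothetical measurements on study days 0 and 60; estimate day 30.
labs = [(0, 100.0), (60, 70.0)]
estimate = interpolate_linear(labs, 30)
print(estimate)
```

Interpolated values should be flagged in the analytic dataset so that they can be excluded in sensitivity analyses.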

Alternatively, there may be a need to link datasets or to use already linked datasets. SEER-Medicare is an example of an already linked dataset that combines the richness of the SEER cancer diagnosis data with claims data from Medicare. 30 Unique patient identifiers that can be linked across datasets (such as Social Security numbers) provide opportunities for powerful linkages with other datasets. 31 Other methods have been developed that do not rely on the existence of unique identifiers. 32 As described above, linking medical data with environmental data, population-level data, or census data provides rich datasets for addressing research questions. Privacy concerns raised by individual contributors can greatly increase the complexity and time needed for a study with linked data.

Data linking combines information on the same person from multiple sources to increase the richness of information available in a study. This is in contrast to data pooling and networking, tools primarily used to increase the size of an observational study.
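The distinction can be illustrated with toy records. In this sketch, linking adds variables to the same person (more columns) while pooling adds people (more rows). The field names and the shared `patient_id` key are assumptions for illustration; real linkages often lack a clean unique identifier and must address privacy requirements.

```python
def link_records(source_a, source_b, key="patient_id"):
    """Deterministic linkage: merge fields for individuals found in both sources."""
    b_by_id = {rec[key]: rec for rec in source_b}
    return [{**rec, **b_by_id[rec[key]]}
            for rec in source_a if rec[key] in b_by_id]


def pool_records(*sources):
    """Pooling: stack records from separate sources to enlarge the study."""
    return [rec for source in sources for rec in source]


# Hypothetical registry and claims extracts describing overlapping patients.
registry = [{"patient_id": 1, "stage": "II"}, {"patient_id": 2, "stage": "I"}]
claims = [{"patient_id": 1, "procedure": "resection"}]
linked = link_records(registry, claims)

# Hypothetical extracts from two sites holding different patients.
site_a = [{"patient_id": 10, "outcome": 0}]
site_b = [{"patient_id": 20, "outcome": 1}, {"patient_id": 21, "outcome": 0}]
pooled = pool_records(site_a, site_b)

print(linked)
print(len(pooled))
```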

Time Period and Duration of Followup

In an ideal situation, researchers have easy access to low-cost, clinically rich data about patients who have been continuously observed for long periods of time. This is seldom the case. Often, the question being addressed is sensitive to the time the data were collected. If the question is about a newly available drug or device, it will be essential that the data capture the time period of relevance. Other questions are less sensitive to secular changes; in these cases, older data may be acceptable.

Inadequate length of followup for individuals is often the key time element that makes data unusable. How long is necessary depends on the research question; in most cases, information about outcomes associated with specific exposures requires a period of followup that takes the natural history of the outcomes into account. Data from registries or from clinical care may be ideal for studies requiring long followup. Commercial insurers see large amounts of turnover in their covered patient populations, which often makes the length of time that data are available on a given individual relatively short. This is also the case with Medicaid data. The populations in data from commercial insurers or Medicaid, however, are so large that reasonable numbers of relevant individuals with long followup can often be identified. It should be noted that when a study population is restricted to patients with longer than typical periods of followup within a database, the representativeness of those patients should be assessed. Individuals insured by Medicare are typically insured by Medicare for the rest of their lives, so these data are often appropriate for longitudinal research, especially when they can be coupled with data on drug use. Similarly, the VA health system is often a source of data for CER because of the relatively stable population that is served and the detail of the clinical information captured in the system's electronic records.
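When assessing whether a database offers adequate followup, it can help to compute each individual's continuous observation time from an index date. The sketch below assumes enrollment is available as start and end dates per coverage period; the function name, the zero-day allowable gap, and the dates are hypothetical.

```python
from datetime import date


def continuous_followup_days(spans, index_date, max_gap_days=0):
    """Days of continuous enrollment starting at index_date (inclusive).

    spans: list of (start, end) date tuples, end inclusive.
    Coverage periods separated by more than max_gap_days end the followup.
    Returns 0 if the person is not enrolled on index_date.
    """
    covered_until = None
    for start, end in sorted(spans):
        if end < index_date:
            continue                      # period ended before the index date
        if covered_until is None:
            if start > index_date:
                return 0                  # not enrolled on the index date
            covered_until = end
        elif (start - covered_until).days - 1 <= max_gap_days:
            covered_until = max(covered_until, end)
        else:
            break                         # gap too long; followup ends
    if covered_until is None:
        return 0
    return (covered_until - index_date).days + 1


# Hypothetical member: two back-to-back periods, then a five-month gap.
enrollment = [
    (date(2010, 1, 1), date(2010, 6, 30)),
    (date(2010, 7, 1), date(2010, 12, 31)),
    (date(2011, 6, 1), date(2011, 12, 31)),
]
days = continuous_followup_days(enrollment, date(2010, 3, 1))
print(days)
```

Restricting a cohort to individuals whose value exceeds some minimum is straightforward, but, as noted above, the representativeness of the retained patients should then be assessed.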

Table 8.2 provides the types of questions, with an example for each, that an investigator should ask when choosing data.

Table 8.2. Questions to consider when choosing data.

- Ensuring Quality Data

When considering potential data resources for a study, an important element is the quality of the information in the resource. Using databases with large amounts of missing information, or that do not have rigorous and standardized data editing, cleaning, and processing procedures increases the risk of inconclusive and potentially invalid study results.

Missing Data

One of the biggest concerns in any investigation is missing data. Depending on which data elements are affected and on whether there is a pattern in the type and extent of missingness, missing data can compromise the validity of the resource and any studies that are done using that information. It is important to understand what variables are more or less likely to be missing, to define a priori an acceptable percent of missing data for key data elements required for analysis, and to be aware of the efforts an organization takes to minimize the amount of missing information. For example, data resources that obtain data from medical or insurance claims will generally have higher completion rates for data elements used in reimbursement, while optional items will be completed less frequently. A data resource may also have different standards for individual versus group-level examination. For example, while ethnicity might be the only missing variable in an individual record, it could be absent for a significant percentage of the study population.
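A simple screen against an a priori missingness threshold might look like the following. This is a sketch under stated assumptions: records are dictionaries in which a missing value is stored as `None`, and the 20 percent threshold and field names are arbitrary illustrations rather than recommended values.

```python
def missing_fraction(records, fields):
    """Fraction of records in which each field is missing (None or absent)."""
    n = len(records)
    return {f: sum(1 for r in records if r.get(f) is None) / n for f in fields}


# Hypothetical extract: ethnicity is the only gap in any single record,
# yet it is missing for half of the study population.
cohort = [
    {"age": 64, "sex": "F", "ethnicity": None},
    {"age": 71, "sex": "M", "ethnicity": "Hispanic"},
    {"age": 58, "sex": "F", "ethnicity": None},
    {"age": 80, "sex": "M", "ethnicity": "Non-Hispanic"},
]
THRESHOLD = 0.20  # acceptable missingness, fixed before looking at the data

fractions = missing_fraction(cohort, ["age", "sex", "ethnicity"])
too_sparse = [f for f, frac in fractions.items() if frac > THRESHOLD]
print(fractions)
print(too_sparse)
```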

Some investigators impute missing data elements under certain circumstances. For example, in a longitudinal resource, data that were previously present may be carried forward if the latest update of a patient's information is missing. Statistical imputation techniques may be used to estimate or approximate missing data by using the characteristics of cases with complete data to model the values for cases with missing data. 33 - 35 Data that have been generated in this manner should be clearly identified so that they can be removed for sensitivity analyses, as may be appropriate. Additional information about methods for handling missing data in analysis is covered in chapter 10.
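The carry-forward approach, together with the recommendation to identify imputed values, can be sketched as follows. The function name and the blood pressure readings are hypothetical; each output value carries a flag so that imputed entries can be dropped in a sensitivity analysis.

```python
def carry_forward(series):
    """Last observation carried forward, with an explicit imputation flag.

    series: values in time order, with None marking a missing update.
    Returns a list of (value, was_imputed) tuples. Leading gaps stay missing
    because there is no earlier observation to carry forward.
    """
    filled = []
    last_observed = None
    for value in series:
        if value is None and last_observed is not None:
            filled.append((last_observed, True))
        else:
            filled.append((value, False))
            if value is not None:
                last_observed = value
    return filled


# Hypothetical systolic blood pressure readings across five updates.
readings = [None, 120, None, None, 130]
filled = carry_forward(readings)

# Sensitivity analysis: keep only values that were actually observed.
observed_only = [v for v, imputed in filled if not imputed and v is not None]
print(filled)
print(observed_only)
```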

Changes That May Alter Data Availability and Consistency Over Time

Any data resource that collects information over time is likely to eventually encounter changes in the data that will affect longitudinal analyses. These changes could be either a singular event or a gradual shift in the data and can be triggered by the organization that maintains the database or by events beyond the control of that organization including adjustments in diagnostic practices, coding and reimbursement modifications, or increased disease awareness. Investigators should be aware of these changes as they may have a substantial effect on the study design, time period, and execution of the project.

Sudden changes in the database may be dealt with by using trend breaks. These are points in time where the database is discontinuous, and analyses that cross over these points will need to be interpreted with care. Examples of trend break events might be major database upgrades and/or redesigns or changes in data suppliers. Other trend break events that are outside the influence of the maintenance organization might be medical coding upgrades (e.g., ICD-9 to ICD-10), announcements or presentations at conferences (e.g., Women's Health Initiative findings) that may lead to changes in medical practice, or high profile drug approvals or withdrawals.
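One way to handle trend breaks in practice is to label each observation with the segment of the database history it falls in, so that analyses can be run within segments or can flag comparisons that cross a break. The sketch below assumes the break dates are known in advance; the date used is a placeholder, not a statement about when any particular change occurred.

```python
from bisect import bisect_right
from datetime import date


def assign_segments(observation_dates, break_dates):
    """Return a segment index for each observation date.

    Segment 0 is before the first break; an observation falling on a break
    date is assigned to the segment that starts on that date.
    """
    breaks = sorted(break_dates)
    return [bisect_right(breaks, d) for d in observation_dates]


# Hypothetical break: the date a database switched coding systems.
breaks = [date(2012, 1, 1)]
visits = [date(2011, 12, 31), date(2012, 1, 1), date(2012, 6, 15)]
segments = assign_segments(visits, breaks)

# Followup that spans more than one segment needs careful interpretation.
crosses_break = len(set(segments)) > 1
print(segments, crosses_break)
```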

More gradual events can also affect the data availability. Software upgrades and changes might result in more data being available for recently added participants versus individuals who were captured in prior versions. Changes in reimbursement and recommended practice could lead to shifts in use of ICD-9 codes, or to more or less information being entered for individuals.

Validity of Key Data Definitions

Validity assessment of key data in an investigation is an important but sometimes overlooked issue in health care research using secondary data. There is a need to assess not only the general definition of key variables, but also their reliability and validity in the particular database chosen for the analysis. In some cases, particularly for data resources commonly used for research, other researchers or the organization may have validated outcomes of health events (e.g., heart attack, hospitalization, or mortality). 36 Creating the best definitions for key variables may require the involvement of knowledgeable clinicians who might suggest that the occurrence of a specific procedure or a prescription would strengthen the specificity of a diagnosis. Knowing the validity of other key variables, such as race/ethnicity, within a specific dataset is essential, particularly if results will be described in these subgroups.

Ideally, validity is examined by comparing study data to additional or alternative records that represent a “gold standard,” such as paper-based medical records. We described in the Administrative Data section above how validity of diagnoses associated with administrative claims might be assessed relative to paper-based records. EHRs and non–claims-based resources do not always allow for this type of assessment, but a more accommodating validation process has not yet been developed. When a patient's primary health care record is electronic, there may not be a paper trail to follow. Commonly, all activity is integrated into one record, so there is no additional documentation. On the other hand, if the data resource pulls information from a switch company (an organization that specializes in routing claims between the point of service and an insurance company), there may be no mechanism to find additional medical information for patients. In those cases, the information included in the database is all that is available to researchers.

- Data Privacy Issues

Data privacy is an ongoing concern in the field of health care research. Most researchers are familiar with the Health Insurance Portability and Accountability Act (HIPAA), enacted in 1996 in part to standardize the security and privacy of health care information. HIPAA coined the term “protected health information” (PHI), defined as any individually identifiable health information (45 CFR 160.103). HIPAA requires that patients be informed of the use of their PHI and that covered entities (generally, health care clearinghouses, employer-sponsored health plans, health insurers, and medical service providers) track the use of PHI. HIPAA also provides a mechanism for patients to report when they feel these regulations have been violated. 37

In practical terms, this has resulted in an increase in the amount and complexity of documentation and permissions required to conduct healthcare research and a decrease in patient recruitment and participation levels. 38 - 39 While many data resources have established procedures that allow for access to data without personal identifiers, obtaining permission to use identifiable information from existing data sources (e.g., from chart review) or for primary data collection can be time consuming. Additionally, some organizations will not permit research to proceed beyond a certain point (e.g., beginning or completing statistical analyses, dissemination, or publication of results) without proper institutional review board approvals in place. If a non-U.S. data resource is being used, researchers will need to be aware of differences between U.S. privacy regulations and those in the country where the data resource resides.

Adherence to HIPAA regulations can also affect study design considerations. For example, since birth, admission, and discharge dates are all considered to be PHI, researchers may need to use a patient's age at admission and length of stay as unique identifiers. Alternatively, a limited data set that includes PHI but no direct patient identifiers such as name, address, or medical record numbers may be defined and transferred with appropriate data use agreements in place. Organizations may have their own unique limits on data sharing and pooling. For example, in the VA system, the general records and records for condition-specific treatment, such as HIV treatment, may not be pooled. Additional information regarding HIPAA regulations as they apply to data used for research may be found on the National Institutes of Health Web site. 40
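The substitution of age at admission and length of stay for the underlying dates can be sketched as below. The function names and the sample dates are hypothetical, and whether the derived fields satisfy a particular de-identification requirement is a separate determination that this sketch does not make.

```python
from datetime import date
from typing import Dict


def age_in_years(birth: date, on: date) -> int:
    """Whole years completed between the birth date and the given date."""
    years = on.year - birth.year
    # Subtract one year if the birthday has not yet occurred in that year.
    if (on.month, on.day) < (birth.month, birth.day):
        years -= 1
    return years


def derive_stay_fields(birth: date, admission: date, discharge: date) -> Dict[str, int]:
    """Replace the three dates with two derived values."""
    return {
        "age_at_admission": age_in_years(birth, admission),
        "length_of_stay_days": (discharge - admission).days,
    }


# Hypothetical patient: the dates themselves would not be passed downstream.
record = derive_stay_fields(date(1950, 6, 15), date(2010, 6, 14), date(2010, 6, 20))
```

Only the derived dictionary would be carried into the analytic dataset; the original dates stay with the data holder.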

- Emerging Issues and Opportunities

Data From Outside of the United States

Where appropriate, non-U.S. databases may be considered to address CER questions, particularly for longitudinal studies. One of the main reasons is that, unlike the majority of U.S. health care systems, several countries with single-payer systems, such as Canada, the United Kingdom, and the Netherlands, have regional or national EMR systems. This makes it much easier to obtain complete, long-term medical records and to follow individuals in longitudinal studies. 41

The Clinical Practice Research Datalink (CPRD) is a collection of anonymized primary care medical records from selected general practices across the United Kingdom. These data have been linked to many other datasets to address comparative effectiveness questions. An example is a study that linked the CPRD to the Myocardial Ischaemia National Audit Project registry in England and Wales. The researchers answered questions about the risks associated with discontinuing clopidogrel therapy after a myocardial infarction; the study was performed when the database was still called the General Practice Research Database. 42

While the selection of a non-U.S. data source may be the right choice for a given study, there are a number of things to consider when designing a study using one of these resources.

One of the main considerations is whether the study question can be appropriately addressed using a non-U.S. resource. Questions that should be addressed during the study design process include:

- Is the exposure of interest similar between the study and target population? For example, if the exposure is a drug product, is it available in the same dose and form in the data resource? Is it used in the same manner and frequency as in the United States?

- Are there any differences in availability, cost, practice, or prescribing guidelines between the study and target populations? Has the product been available in the study population and the United States for similar periods of time?

- What is the difference between the health care systems of the study and target populations? Are there differences in diagnosis methods and treatment patterns for the outcome of interest? Does the outcome of interest occur with the same frequency and severity in the study and target populations?

- Are the comparator treatments similar to those that would be available and used in the United States?

An additional consideration is data access. Access to some resources, such as the United Kingdom's CPRD, can be purchased by interested researchers. Others, such as Canada's regional health care resources, may require the personal interest of and an official association with investigators in that country who are authorized to use the system. If a non-U.S. data resource is appropriate for a proposed study, the researcher will need to become familiar with the process for accessing the data and allow for any extra time and effort required to obtain permission to use it.

A sound justification for selecting a non-U.S. data resource, a solid understanding of the similarities and differences of the non-U.S. versus the U.S. systems, as well as careful discussion of whether the results of the study can be generalized to U.S. populations will help other researchers and health care practitioners interpret and apply the results of non-U.S.-based research to their particular situations.

Point of Care Data Collection and Interactive Voice Response/Other Technologies

Traditionally, the data used in epidemiologic studies have been gathered at one point in time, cleaned, edited, and formatted for research use at a later point. As technology has developed, however, data collected close to the point of care increasingly have been available for analysis. Prescription claims can be available for research in as little as one week.

In conjunction with a shortened turnaround time for data availability, the point at which data are coded and edited for research is also occurring closer to when the patient received care. Many people are familiar with health care encounters where the physician takes notes, which are then transcribed and coded for use. With the advent of EHRs, health information is now coded and transcribed into a searchable format at the time of the visit; that is, the information is directly coded as it is collected, rather than being transcribed later.

Another innovation is using computers to collect data. Computer-aided data collection has been used in national surveys since the 1990s 43 and also in types of research (such as risky behaviors, addiction, and mental health) where respondents might not be comfortable responding to a personal interviewer. 44 - 46

The main advantage of these new and timely data streams is more detailed data, sometimes available in real or near-real time, that can be used to spot trends or patterns. Because data can be recorded at the time of care by the health care provider, miscoding and misinterpretation may be reduced. Computerized data collection and Interactive Voice Response are becoming easier and less expensive to use, enabling investigators to reach more participants more easily. Some disadvantages are that these data streams are often specialized (e.g., bedside prescribing), and, without linkage to other patient characteristics, it can be difficult to track unique patients. Also, depending on the survey population, it can be challenging to maintain current telephone numbers. 47 - 48

Data Pooling and Networking

A major challenge in health research is studying rare outcomes, particularly in association with common exposures. Two methods that can be used to address this challenge are data pooling and networking. Data pooling is combining data, at the level of the unit of analysis (i.e., individual), from several sources into a single cohort for analysis. Pooled data may also include data from unanalyzed and unpublished investigations, helping to minimize the potential for publication bias. However, pooled analyses require close coordination and can be very difficult to complete due to differences in study methodology and collection practices. An example is an analysis that pooled primary data from four cohorts of breast cancer survivors to ask a new question about the effectiveness of physical activity. The researchers had to ensure the comparability of the definitions of physical activity and its intensity in each cohort. 49 Another example is a study that pooled data from four different data systems, including Medicare, Medicaid, and a private insurer, to assess the comparative safety of biological products in rheumatologic diseases. The authors describe their assessment of the comparability of covariates across the data systems. 50 Researchers must be sensitive to whether additional informed consent of individuals is needed for using their data in combination with other data. Furthermore, privacy concerns sometimes do not allow for the actual combination of raw study data. 51

An alternative to data pooling is data networking, sometimes referred to as virtual data networks or distributed research networks. These networks have become possible as technology has developed to allow more sophisticated linkages. In this situation, common protocols, data definitions, and programming are developed for several data resources. The results of these analyses are combined in a central location, but individual study data do not leave the original data resource site. The advantage of this is that data security concerns may be fewer. As with data pooling, the differences in definitions and use of terminology require careful adjudication before the results are combined for analyses. Examples of data networking are the HMO Research Network and FDA's Sentinel Initiative. 52 - 54
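The distributed arrangement can be illustrated with a small sketch in which each site runs the same summary function on its own records and only the aggregate leaves the site. This is a simplified illustration and not the design of any particular network; the class `SiteSummary`, the function names, and the sample records are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class SiteSummary:
    """Aggregate returned by a site; it contains no individual-level rows."""
    site: str
    events: int
    person_years: float


def summarize_site(site: str, records: Iterable[Tuple[bool, float]]) -> SiteSummary:
    """Common program run locally at each site.

    Each record is (had_event, person_years_of_follow_up).
    """
    rows = list(records)
    events = sum(1 for had_event, _ in rows if had_event)
    person_years = sum(years for _, years in rows)
    return SiteSummary(site, events, person_years)


def combined_rate_per_1000(summaries: List[SiteSummary]) -> float:
    """Central step: combine site aggregates into one crude incidence rate."""
    total_events = sum(s.events for s in summaries)
    total_person_years = sum(s.person_years for s in summaries)
    return 1000 * total_events / total_person_years


# Hypothetical individual-level data that never leaves each site.
site_a = summarize_site("A", [(True, 2.0), (False, 3.0)])
site_b = summarize_site("B", [(False, 4.0), (True, 1.0)])
rate = combined_rate_per_1000([site_a, site_b])
```

In data pooling, by contrast, the individual-level rows themselves would be combined into a single cohort before analysis. A crude rate is used here only to keep the example short; real networks typically share richer aggregates.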

The advantage of these methods is the ability to create large datasets to study rare exposures and outcomes. Data pooling can be preferable to meta-analyses that combine the results of published studies because unified guidelines can be developed for inclusion criteria, exposures, and outcomes, and analyses using individual patient level data allow for adjustment for differences across datasets. Often, creation and maintenance of these datasets can be time consuming and expensive, and they generally require extensive administrative and scientific negotiation, but they can be a rich resource for CER.

Personal Health Records

Although they are not presently used for research to a significant extent, personal health records (PHRs) are an alternative to electronic medical records. Typically, PHRs are electronically stored health records that are initiated by the patient. The patient enters data about his or her health care encounters, test results, and, potentially, responses to surveys or documentation of medication use. Many of these electronic formats are Web-based and therefore easily accessible by the patient when receiving health care in diverse settings. The application that is used by the patient may be one for which he or she has purchased access, or it may be sponsored by the health care setting or insurer with which the patient has contact. Other PHRs, such as HealthVault and NoMoreClipboard, can be accessed freely. One example of a widely used PHR is My HealtheVet, which is the personal health record provided by the VA to the veterans who use its health care system. 55 My HealtheVet is an integrated system in which the patient-entered data are combined with the EHR and with health management tools.